Sri Ray-Chauduri and Esme Symons, Technology and Engineering editors

If you think the documentary Coded Bias sounds like it’s only for technology or engineering enthusiasts, think again. If you have ever used social media, bought something online or walked down a street of a big city, then you need to watch this film!

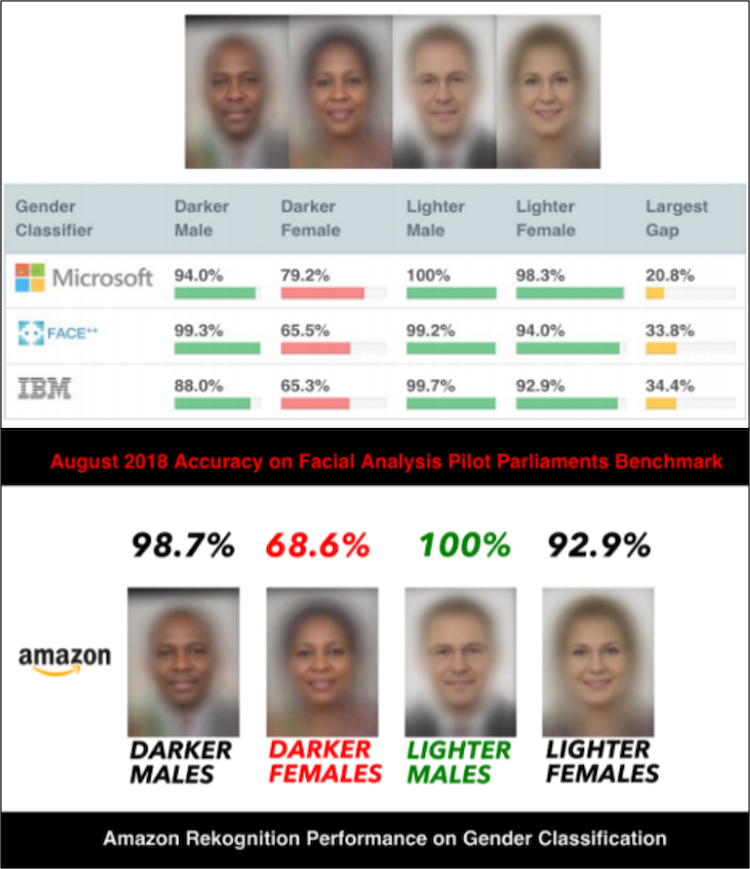

The documentary explores bias in computing algorithms. It begins with MIT graduate student Joy Buolamwini’s discovery that some facial recognition technologies (FRTs) couldn’t accurately detect faces that were female or had darker skin, including her own. Buolamwini dug deeper and found that the datasets used to train these technologies mostly consisted of male and lighter-skinned faces. Unfortunately, this finding turns out to be just the tip of the iceberg. Through powerful clips from an array of female leaders in the field and sobering real-world examples, the film takes the audience on a compelling journey through the hidden perils of algorithmic bias.

How does bias infiltrate computing algorithms?

In simple terms, an algorithm is a procedure or set of instructions used to perform a computational task or solve a problem. One method of programming a computer is to give it instructions on what to do. Alternatively, you could supply it with large amounts of data and let the machine figure out how to classify the data by processing the available information. This kind of computer ‘learning’ method works well, but programmers need to proceed carefully. Zeynep Tufekci, a social technology expert interviewed in the film, says “We don’t really understand why it works – it has errors we don’t really understand…and the scary part is because it’s machine learning, it’s a black box to even the programmers”. Microsoft’s 2016 chatbot experiment on Twitter (named Tay) is a notorious case in point. Within hours of release, Tay digested enough data from the Twitterverse to become a deplorable tweeter, boldly expressing a bevy of inaccurate and unimaginable prejudices. The importance of thinking about the data and examples used to teach machines patterns should not be underestimated.
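To make the point about training data concrete, here is a minimal sketch in Python of the ‘learning’ approach described above. It is not how any real FRT works: the scores, the two groups and the function names `learn_cutoff` and `detection_rate` are all invented for illustration. The toy ‘learner’ simply picks the cutoff that makes the fewest mistakes on the examples it is given, so whatever is missing from those examples is missing from what it learns.

```python
def learn_cutoff(examples):
    """Return the score cutoff that makes the fewest mistakes on the examples.

    Each example is a (score, is_face) pair; scores at or above the cutoff
    are classified as faces. Ties keep the lowest cutoff.
    """
    best_cutoff, fewest_errors = None, None
    for cutoff in sorted({score for score, _ in examples}):
        errors = sum((score >= cutoff) != is_face for score, is_face in examples)
        if fewest_errors is None or errors < fewest_errors:
            best_cutoff, fewest_errors = cutoff, errors
    return best_cutoff


def detection_rate(cutoff, face_scores):
    """Fraction of real faces that the cutoff classifies as faces."""
    return sum(score >= cutoff for score in face_scores) / len(face_scores)


def labelled(scores, is_face):
    """Attach the same label to every score."""
    return [(score, is_face) for score in scores]


# Invented data: one made-up "face-likeness" score per image.
NON_FACES = [0.10, 0.15, 0.20, 0.25, 0.30]
GROUP_A_FACES = [0.70, 0.75, 0.80, 0.85, 0.90]
GROUP_B_FACES = [0.40, 0.45, 0.50, 0.55, 0.60]

# A skewed training set contains faces from group A only.
skewed_training = labelled(NON_FACES, False) + labelled(GROUP_A_FACES, True)
# A balanced training set also includes faces from group B.
balanced_training = skewed_training + labelled(GROUP_B_FACES, True)

skewed_cutoff = learn_cutoff(skewed_training)
balanced_cutoff = learn_cutoff(balanced_training)

for name, cutoff in [("skewed", skewed_cutoff), ("balanced", balanced_cutoff)]:
    print(
        f"{name} training: cutoff={cutoff:.2f}, "
        f"group A detected={detection_rate(cutoff, GROUP_A_FACES):.0%}, "
        f"group B detected={detection_rate(cutoff, GROUP_B_FACES):.0%}"
    )
```

With these made-up numbers, the learner trained on the skewed set makes no mistakes on its own training data, yet it misses every face in group B. The code is identical in both runs; only the examples change.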

We naturally associate artificial intelligence (AI) with the future, but Buolamwini emphasizes that “AI is based on data and data [are] a reflection of our history. So, the past dwells within our algorithms.” These data expose and proliferate inequities that have always existed. Mathematician and author Cathy O’Neil, who is also prominently featured in the film, believes it’s a problem if society puts its faith in the idea of a fair and neutral algorithm while ignoring the intrinsically biased data we feed it. “The underlying mathematical structure of the algorithm is not racist or sexist, but the data embed the past…and we all know that humans can be unfair, that humans can exhibit racist or sexist or…ableist discriminations.” Transparency about how data are used and what they are used for is critical, but so is the question of who codes the algorithm. We all carry unconscious biases, and ensuring the people involved in algorithm design accurately reflect the demographics of our current society can help address these types of discriminations in AI.

Automating hidden inequities

Buolamwini and her colleagues demonstrated bias in the FRTs they tested, but what other pitfalls are out there? The film reveals how we are constantly interacting with automated decision-making algorithms that can have a major impact on our lives beyond what we see in our social media feeds. Well-documented examples include racial bias in tools allocating healthcare in the United States (US), bias in sentencing within the US and Canadian justice systems, and gender bias in a now defunct Amazon AI hiring tool. In 2019, even Apple co-founder Steve Wozniak openly shared concerns on Twitter over possible algorithmic bias with the company’s credit card.

O’Neil, who also worked in the finance industry, says if we think of machine learning as a scoring system that estimates the likelihood of what a person will do in a particular scenario (such as paying back a loan or getting a credit card), society must consider the power imbalance in the structure of the decision making. She stresses there is no recourse for people “…who didn’t get credit card offers to say ‘I’m going to use my AI [or algorithm] against the credit card company’. People are suffering algorithmic harm and not being told what’s happening to them and there is no appeal system, no accountability.”

Accountability in the age of automation

Buolamwini founded the Algorithmic Justice League (AJL) after her realization that “oversight in the age of automation is one of the largest civil rights concerns.” The AJL aims to lead a cultural movement towards equitable and accountable AI, calling on everyone to “become an agent of change” by running educational workshops or reporting cases of algorithmic inequality for investigation.

Ultimately, enforceable legal frameworks are needed. Right now, several cities in the US have banned the use of FRTs in their jurisdictions, and legislation to ban the federal use of FRTs was introduced in 2020. An Algorithmic Accountability Act, requiring companies to conduct algorithmic impact assessments, will also be re-introduced in Congress this year.

The situation is somewhat different in Canada. Professor Christian Gideon, an expert in law and AI at the University of Calgary, says that “although federal [and] provincial privacy laws have been invoked to fault the use of FRT [such as Clearview AI], these laws are general privacy laws [and do] not specifically deal with FRTs…[but] there are many other problems with FRT outside the scope of privacy laws [such as] racial and gender bias associated with the technology. These are Canadian Charter of Rights and Freedoms issues and…why Canada should follow the trend in the US towards a more holistic regulation (if not ban) of the technology.” He suggests Canadians “can advocate for such policy and legislative accountability through their elected representatives.”

The information age has provided advances of immense benefit to the world, but we should not forge ahead blindly. Coded Bias emphasizes that decisions about algorithm design cannot simply be mathematical; they must be ethical as well. You can stream Coded Bias online or arrange for a screening by visiting Women Make Movies.

~30~
