By Nick Brown, new science communicator

As permafrost research heats up, national and territorial geological surveys are digging up old data to help answer new questions about Canada’s cold regions.

The need for permafrost data in Canada

Permafrost – ground that remains at or below 0°C for at least two consecutive years – is changing as Earth’s climate warms. Across northern Canada, the effects are already apparent: slow-moving landslides threaten highways, buildings are sinking and collapsing, and traditional food sources are at risk. The annual cost of permafrost thaw in the Northwest Territories has been estimated at $51 million. In the Yukon, the additional maintenance cost for a single highway was estimated at $200,000 each year.

To anticipate and mitigate the changes and their impacts, scientists use computer models to predict how ground temperature and ice content will change well into the next century.

An accurate representation of permafrost in a model requires detailed descriptions of the ground: properties that include how well it stores or conducts heat or how the moisture content changes with depth. Multiple years of ground temperature observations are also needed to evaluate and improve the models.

However, these data aren’t always available.

Collecting new permafrost data

A permafrost drill core from the Northwest Territories, Canada, showing alternating bands of ice and sediment. As the ice lenses melt, the ground can settle. This segment was collected from more than four metres below the ground surface. Image source: Gruber et al. 2018.

Because permafrost occurs underground, data about it are difficult and costly to collect. Scientists use drills to extract long plugs, or cores, of the subsurface and record the ice they contain: how much there is, and whether it is dispersed, intermittent, or concentrated in thick bands.

These characteristics control how permafrost is affected by and responds to climate change.

Once they’ve drilled a borehole, scientists insert instruments to record ground temperatures at regular intervals, sometimes collecting measurements every hour.

This is exactly the kind of information permafrost modellers need.

However, once these data have been used for a particular experiment, they might not be shared in a way that is easily accessible for other researchers. In fact, they may not be shared at all.

Digging into the archives

In Whitehorse, Panya Lipovsky, a surficial geologist with the Yukon Geological Survey, has been working to change that. Together with colleagues from the Yukon government and Yukon College, she is building a permafrost database for the Yukon.

“Initially we just formed this working group,” she says, “and we just sort of brainstormed about what’s out there and who’s willing to share what they have, and created sort of a central repository to dump all the raw data on.”

The project grew from there.

The effort is part of a Canada-wide trend to make historic permafrost datasets more available. Both the Northwest Territories Geological Survey and the Geological Survey of Canada are also developing web-accessible databases for historic permafrost data.

One of the biggest limitations to these initiatives is resourcing. Despite the importance of these data, typically neither government budgets nor research grants prioritize them.

It also takes time, effort, and the right personnel to organize and standardize the historic data.

“It’s been a crazy amount of work,” Lipovsky says. “But it’s fun to have something that’s sort of alive and … continuing to improve.”

From data trickles to a data flood

The need for better data sharing has been brought on, at least in part, by the relative ease with which data are now collected.

Instruments like this one, which record measurements directly onto chart paper, were common before digital dataloggers became available. Image used under CC public domain licence.

University of Ottawa professor Antoni Lewkowicz has been collecting permafrost data since the late 1970s. Early in his career, “they would have […] a piece of paper that, when it was struck by a needle, would leave a line so you would end up with a chart,” he says. “At the end of the summer, you’ve got all these charts that you carefully take home in your checked baggage.”

Even after dataloggers — small computers that automate the recording of observations — were introduced in the early 1980s, the amount of data generated was modest and serious challenges remained.

“It still wasn’t easy to transform that information into results,” Lewkowicz says. “It still took a lot of manual work to do so. And I know that because we didn’t trust things. We would actually go through in the field and write down all the numbers that were on our CR 21 [one of the early dataloggers] because […] what if something went wrong and all of it was lost?”

Now, however, the volume of data being created is enormous. The Northwest Territories Database alone contains more than 22 million ground temperature observations from 1994 to the present day, along with descriptions of more than 15,000 drill holes.

The changing culture and practice of data sharing

Historically, Lewkowicz says, if researchers shared data, it was through personal contacts with other researchers who might be interested.

“They’re working on another island, and you’re working on this island,” he says. “Maybe you’ve got something that you can say to each other.”

He also says that, even then, sharing data was “virtually impossible because people were using unique techniques. So you design the technique to collect measurements — collect data for a specific project — and those data hopefully will answer the question you’re asking. But it doesn’t mean that they’re of any use to anybody else.”

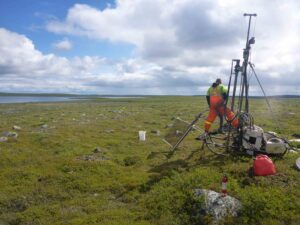

A lightweight drill used to collect permafrost cores. Image source: Gruber et al. 2018.

Research culture and practice have since shifted to include data sharing and publication. The Tri-Council, one of Canada’s main research-funding bodies, strongly recommends making research data available in the public domain — that is, not restricting use of the data through exclusive intellectual property rights. Guiding principles have been established that describe how scientific data ought to be shared. Known as the FAIR principles, they recommend that data be findable, accessible, interoperable and reusable.

The third principle, interoperability, describes the ease with which multiple datasets from different sources can be combined. This can be achieved by using consistent terminology and file formats that don’t require proprietary software and by providing thorough descriptions of how the data were collected. When working with so many sources, even small differences hamper dataset integration.
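To make the idea concrete, here is a minimal sketch of what combining two sources can involve. Everything in it is hypothetical: the field names, the sample values, and the target layout (`site_id`, `time_utc`, `depth_m`, `temperature_c`) are assumptions chosen for illustration, not the schema of any of the databases mentioned in this article. It simply shows how two ground temperature records that differ in naming, depth units and timestamp format can be mapped onto one common form.

```python
from datetime import datetime, timezone

# Hypothetical records from two sources. Both describe a ground temperature
# reading, but with different field names, depth units and time formats.
SOURCE_A = [{"borehole": "BH-01", "time": "2021-07-01T12:00:00+00:00",
             "depth_m": 1.5, "temp_c": -0.8}]
SOURCE_B = [{"site": "BH-02", "date": "01/07/2021 12:00",
             "depth_cm": 150, "T": -1.2}]


def from_source_a(rec):
    """Map a source A record onto the common (assumed) layout."""
    t = datetime.fromisoformat(rec["time"]).astimezone(timezone.utc)
    return {
        "site_id": rec["borehole"],
        "time_utc": t.isoformat(),
        "depth_m": float(rec["depth_m"]),
        "temperature_c": float(rec["temp_c"]),
    }


def from_source_b(rec):
    """Map a source B record onto the common (assumed) layout.

    Assumes day/month/year timestamps recorded in UTC and depths in
    centimetres; real metadata would have to confirm both.
    """
    t = datetime.strptime(rec["date"], "%d/%m/%Y %H:%M")
    t = t.replace(tzinfo=timezone.utc)
    return {
        "site_id": rec["site"],
        "time_utc": t.isoformat(),
        "depth_m": rec["depth_cm"] / 100.0,  # centimetres to metres
        "temperature_c": float(rec["T"]),
    }


def combine(source_a, source_b):
    """Return all records in the common layout, sorted for easy comparison."""
    rows = [from_source_a(r) for r in source_a]
    rows += [from_source_b(r) for r in source_b]
    return sorted(rows, key=lambda r: (r["site_id"], r["time_utc"], r["depth_m"]))


if __name__ == "__main__":
    for row in combine(SOURCE_A, SOURCE_B):
        print(row)
```

Note that the converter for the second source has to guess at the date order, time zone and depth units. Thorough descriptions of how the data were collected are what remove that kind of guesswork.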

The recent push to publish and share datasets has been driven by growing demand for permafrost data and by their increasing abundance. It is changing the landscape of permafrost data and research in Canada.

Making historical and current permafrost datasets available to climate modellers is essential to helping Canada’s northern communities predict and adapt to the changes they face in the coming decades.

Feature image: Historic buildings in Dawson City, Yukon, where permafrost change has begun to damage infrastructure. Image credit Bo Mertz, CC BY-SA.

Nick Brown is a data scientist with NSERC PermafrostNet, where he develops tools to support permafrost simulation and data handling and also promotes the adoption of standards for permafrost data. He wrote this article as part of Science Borealis’s Winter 2022 Pitch & Polish, a mentorship program that pairs students and researchers with one of our experienced editors to produce a polished piece of science writing.

