Nahomi Amberber, Policy & Politics co-editor

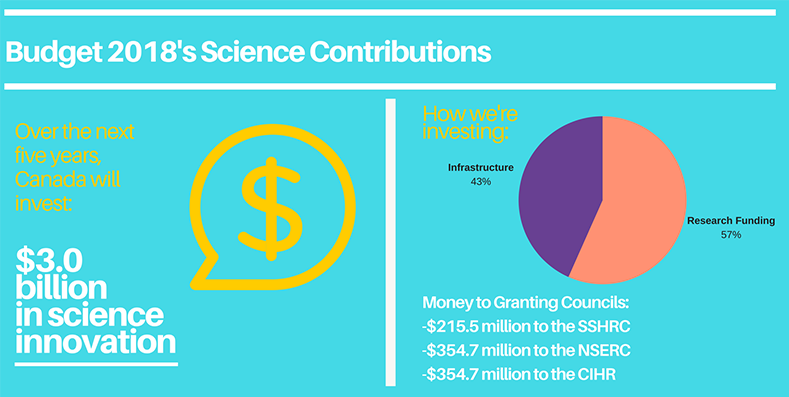

On February 27, 2018, the Government of Canada released a budget that many in the scientific community described as a “win” because it dramatically increased funding for fundamental research. For those following science policy in Canada, this decision was not surprising, because it had been years in the making.

In June 2016, Minister of Science Kirsty Duncan established a panel to assess the state of scientific research and innovation, including the three main granting councils (Canadian Institutes of Health Research (CIHR), Natural Sciences and Engineering Research Council (NSERC) and Social Sciences and Humanities Research Council (SSHRC)). Led by Dr. David Naylor, the panel released its findings in April 2017, recommending an increase in funding and a new advisory council to assist the federal agencies.

The government responded by increasing funding for non-government investigator-led research and infrastructure, although not to the $1.3 billion a year recommended by the panel. For comparison, the 2017 budget made no mention of the three granting councils, and the 2018 increases to the granting councils’ budgets are the largest to date.

Source: Government of Canada, Budget 2018. Infographic: Nahomi Amberber

As more money generally means more opportunities, it’s worth looking at how Canada’s granting councils determine which researchers deserve a piece of the 2018/2019 $925 million research pie.

Like scientific journals and magazines, the three granting councils use a peer-review process to identify the eventual grant recipients. Peer review involves other researchers (not always in the same field) ranking the proposals based on several factors, including the quality and feasibility of the project and its impact on society. Unlike journals, grant peer reviews also assess the academic excellence of the applicants to determine whether they are the right people for the job. The result? A single-blind process in which the reviewers know who the applicants are, but the applicants don’t know who reviewed their proposals.

While reviewers who know the applicant must declare their conflicts of interest to remove any appearance of preference, unconscious biases can still sneak in. Unconscious bias, also known as implicit bias, can work for or against an applicant on the basis of characteristics that have no bearing on the applicant’s merit or excellence.

In the first study of its kind, in 1997, Swedish researchers identified an applicant’s sex and affiliation with a reviewer as features that affected the applicant’s ranking. Although there has been little research into this process since then (due to confidentiality issues surrounding the applicants’ and reviewers’ identities), a Canadian team led by Dr. Robyn Tamblyn of McGill University sought to analyze the same problem. In a recent study published in the Canadian Medical Association Journal, Dr. Tamblyn, Director of the Institute of Health Services and Policy Research at the CIHR, and her team show that biases exist here, too.

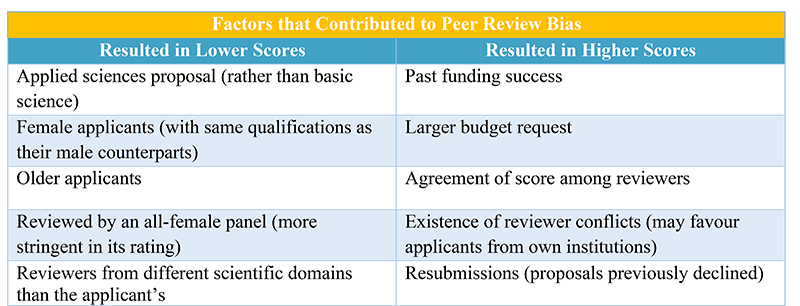

Dr. Tamblyn’s team used data from the 2012–2014 CIHR funding applicants. Committees of 10–15 members ranked applications on a scale from 1 to 4.9 on the concept (25%), approach (50%), and expertise of the applicant (25%). The researchers tried to determine which variables, such as the applicant’s gender and scientific field, influenced the proposal’s score, regardless of the applicant’s scientific productivity.
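To make the weighting concrete, here is a minimal sketch of how one reviewer’s three criterion ratings might combine into a single score. The 25/50/25 weights and the 1 to 4.9 scale come from the description above; combining them as a simple weighted average is an assumption made for illustration, and the function name and example ratings are hypothetical rather than taken from the CIHR or the study.

```python
# Criterion weights as reported in the article.
WEIGHTS = {"concept": 0.25, "approach": 0.50, "expertise": 0.25}

# Rating scale as reported in the article.
SCALE_MIN, SCALE_MAX = 1.0, 4.9


def weighted_score(ratings):
    """Combine one reviewer's criterion ratings into a single score.

    Assumption: the criteria are combined as a plain weighted average.
    The article gives the weights but not the exact formula.
    """
    if set(ratings) != set(WEIGHTS):
        raise ValueError("ratings must cover exactly: " + ", ".join(WEIGHTS))
    for name, value in ratings.items():
        if not SCALE_MIN <= value <= SCALE_MAX:
            raise ValueError(f"{name} rating {value} is outside the scale")
    return sum(WEIGHTS[name] * ratings[name] for name in WEIGHTS)


# Hypothetical ratings, for illustration only.
example = {"concept": 4.0, "approach": 3.6, "expertise": 4.4}
print(round(weighted_score(example), 2))
```

Because the approach carries half the weight, a one-point difference on that criterion moves the combined score twice as much as the same difference on concept or expertise.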

Factors that affected the final score of peer-reviewed science proposals after adjusting for scientific productivity. Source: Assessment of potential bias in research grant peer review in Canada, 2018.

In addition to the biases shown in the table above, Tamblyn’s team showed that “female applicants who had past success rates equivalent to male applicants (previous awards and number of publications) received lower application scores, the difference being greater in applied science applications and as the past success rates increased.” In other words, female applicants were not given the same credit for their past success in new applications that male applicants were, especially if their research was in the applied sciences.

When I asked Dr. Tamblyn if she thought anyone in the scientific community would be surprised by the impact of the unconscious biases shown, she said no. “Other agencies have identified these kinds of biases and, if you talk to people in the community, I think they’ve had some concerns about there being issues in the peer-review process. But I think it’s only now that we’re having more access to the data and they’re doing more science and investigation [on this issue].”

As mentioned, little data are available for this kind of research because of confidentiality issues. Tamblyn also pointed out that those traditionally at the heads of these granting councils usually do not have the skillset required to analyze this type of data. But researchers are slowly overcoming these limitations. As Tamblyn says, “I think […] the idea of wanting to monitor the nature of our scientific funding and evaluate its impact has become more front and center than it ever has been.”

Even as more researchers are looking at the data, policies and tools are being put in place to minimize unconscious bias. Granting councils now refer reviewers and adjudicators to an online unconscious bias training module and have developed both policies centred on training reviewers and clearer guidelines for scoring proposals.

Some might argue that these practices can’t completely mitigate bias, to which Dr. Tamblyn responds that there is another option: a completely open and transparent process.

“We’re going to say who the peer reviewers are and have viewings of the committee meetings so that anybody can look in and see what’s going on… That kind of transparency is a way of essentially [keeping] everybody on their best behavior.”

Much like the use of body cameras on police officers, the idea is that an increase in accountability would ensure that reviewers stick to guidelines more closely when discussions are out in the open. Having a record of meetings would also give granting councils and applicants more concrete evidence to prove or disprove grading inconsistencies if unconscious bias were suspected.

Canada’s approach to funding science is not as objective as we would like it to be. Our government must put forward policies to ensure that the science landscape is untainted by our own (unconscious) biases. Full transparency or not, it’s clear that Canada’s scientific community will now be watching a lot more closely.

~30~

Banner Image: rawpixel.com, CC0 License